The Importance of Clean & Accurate Bidstream Data

Published by Silvia Abreu on

Normalization plays a vital role in ensuring clean, accurate data flows through the bidstream — empowering media owners to better monetize inventory and first-party assets.

Imagine you’re in the market for a new car.

You conduct some online research to get started, meticulously combing through various options. The website you’re using has plenty of filters to narrow down your choices. Do you want a new car? A used car? What make? What model? A convertible? A minivan? What’s your budget? Do you want a specific color? What about MPG standards and electric options? The decisions and filtering go on...and on.

You narrow your search down from hundreds of options to a select few, then figure out where it’s most convenient to purchase your top choice. Maybe you order online and have it delivered to your home; or maybe you head to a dealer for a test drive before dotting your I’s and crossing your T’s.

Before you know it, you’re driving around happily in your new car.

Aside from some potential haggling with the dealer, the search process from start to finish was relatively seamless. You had criteria in mind. Those criteria were met by accurate, readily available information. You filtered and narrowed your search until you found an ideal option or two.

At the core of what made this process so seamless was data — and more specifically, clean and accurate data. Data on the make and model, the color, the car type, and so on. Data that, for the most part, you knew could be trusted and verified as you worked toward your decision.

Having robust data at your fingertips simplified the search and targeting process, allowing you to efficiently find and buy what you were looking for. On the other side of your “consumer journey,” the auto manufacturer and dealer (i.e., the sellers) made their inventory more visible and attractive by infusing key vehicle metadata into your search and qualifying process. This made it likely that any relevant inventory would be included in your consideration set and, potentially, your final decision.

It is this same core economic principle — marketplace transparency drives greater efficiency, trading volume, and satisfaction — that underpins many programmatic marketplaces today, especially those that are transacted via real-time bidding protocols.

For our team at Beachfront, this principle informs how we ingest and process key metadata from our publisher and programmer partners, and how we share it with media buyers and advertisers to help them qualify ad opportunities. Understanding the value of clean and accurate metadata, we present ad opportunities to the buy-side with all of the context and information they need to evaluate the inventory in question. This dressing up of inventory requires the right blend of art and data science, but it is absolutely critical in helping publishers and programmers unlock stronger yield and revenue, which is core to our team’s mission and focus.

Let’s jump in to unpack this a bit more…

The Role of Data in Programmatic Marketplaces.

Within the programmatic advertising ecosystem, media buyers are increasingly demanding greater transparency into the inventory they are purchasing, while media sellers are seeking better tools for sharing and monetizing their valuable first-party data (and metadata).

This is especially true within OpenRTB environments, which facilitate real-time bidding and auctions. To make qualifying metadata available to prospective buyers as part of real-time auctions, media sellers configure and/or populate various objects defined in the OpenRTB specification to enrich and enhance their bid requests. These include details like the device being used, the genre of the content, the app in which the content is consumed, and so on.
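To make this concrete, here is a minimal sketch of a trimmed-down bid request for a CTV ad opportunity. The object and field names (`app`, `app.content.genre`, `device.devicetype`, `imp.video`) follow the OpenRTB 2.5 object model, but every value is hypothetical and chosen only for illustration; real requests carry many more fields.

```python
import json

# A trimmed-down, OpenRTB 2.5-style bid request. Field names follow the
# spec's object model; all values are hypothetical.
bid_request = {
    "id": "example-request-1",
    "imp": [{"id": "1", "video": {"mimes": ["video/mp4"], "w": 1920, "h": 1080}}],
    "app": {
        # The app in which the content is consumed.
        "name": "Example Streaming App",
        "bundle": "com.example.streaming",
        "content": {
            # Free-text genre supplied by the seller; this is the kind of
            # value that varies from seller to seller.
            "genre": "Home & Garden",
            "title": "Example Renovation Show",
        },
    },
    "device": {
        # 3 = Connected TV in the OpenRTB 2.5 device type list.
        "devicetype": 3,
        "os": "Roku",
    },
}

print(json.dumps(bid_request, indent=2))
```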

Sharing this type of data drives buyer confidence and activity, as it sheds a detailed light on what exactly is being auctioned and eventually bought. Advertisers and brands generally know who they want to reach, and often have a sense of the context in which they want their ads to appear. Absent this type of detailed information, media buyers may be reluctant to purchase ad inventory blindly, and will likely seek alternative options where data transparency is readily available.

Not to sound cliché, but data transparency, simply put, is what fuels trust and transaction activity in programmatic marketplaces. Data transparency provides much-needed assurances, while also unlocking intriguing ad personalization options. These in turn encourage spend, lead to greater trading efficiency and volume, and ultimately help media buyers and sellers drive marketing impact and inventory yield, respectively.

But the picture isn’t always this straightforward or rosy. Data can be messy, after all.

Why Data Cleanliness (and Normalization) in the Bidstream is Key.

When qualifying data is sourced from hundreds of different sellers (all of whom likely have proprietary databases and hierarchies), it becomes very difficult for media buyers to piece it all together.

One seller’s “Home & Garden” might be another’s “Home / Garden,” “Home + Graden,” or “House & Gardening,” to give an example. This might not seem like a big deal as you read it, but considering that this metadata is generally fed into algorithms that inform media buying decisions, it becomes clear why it’s critical to have clean and accurate data flowing through the bidstream.

If it is challenging for programmatic SaaS platforms to understand and package important metadata in real time, media buyers become less inclined to purchase inventory.

Activating a single, scaled “Home & Garden” private marketplace (PMP) is a much easier ask than attempting to stitch together several “Home & Garden” permutations at checkout, each with its own unique combination of symbols and spelling errors. On the flip side, getting every media seller to align on hierarchies and consistent nomenclature takes time. Standardized taxonomies certainly help, but our industry moves fast, and ad operations teams all have their own priorities, strategies, and objectives.

This is where the concept of data normalization comes into play. Data normalization is a process by which redundant, inaccurate, and duplicate values and inputs are combed, sorted and organized to arrive at “normal” values, unlocking scale through consolidation while ensuring high levels of data fidelity.
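A first pass at normalization can be as simple as reducing each raw label to a comparison key so that trivial variants collapse together. The sketch below is illustrative only, not Beachfront’s implementation: the `normalize_key` function and its rules (lowercasing, treating “&”, “/”, “+” and “and” as one separator) are assumptions. Note that synonyms such as “House & Gardening” and misspellings such as “Home + Graden” survive this step; they need a lookup table, fuzzy matching, or manual review.

```python
import re
from collections import defaultdict


def normalize_key(raw: str) -> str:
    """Reduce a seller-supplied genre label to a simple comparison key.

    Hypothetical rules: lowercase, unify common separators, tidy spaces.
    """
    text = raw.strip().lower()
    # Treat "&", "/", "+" and the standalone word "and" as the same separator.
    text = re.sub(r"\s*(?:&|/|\+|\band\b)\s*", " & ", text)
    # Collapse any leftover runs of whitespace.
    return re.sub(r"\s+", " ", text).strip()


# Hypothetical raw values as they might arrive from different sellers.
raw_values = ["Home & Garden", "Home / Garden", "home+garden", "HOME and GARDEN", "Sports"]

# Group raw labels under their shared key to show how variants collapse.
groups = defaultdict(list)
for value in raw_values:
    groups[normalize_key(value)].append(value)

for key, variants in groups.items():
    print(f"{key!r}: {variants}")
```

Keeping the rules this conservative is a deliberate choice in the sketch: aggressive string surgery (stripping all punctuation, stemming) can merge labels that genuinely differ, which would undercut the data fidelity normalization is meant to protect.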

We’ve spent quite a bit of time at Beachfront building normalization engines that help solve these exact types of challenges for our media owner and programmer partners. To give an example specific to content metadata:

- Our technology normalizes all of the Genres that we receive from partners via the IAB OpenRTB content object (part of the bid request specification).

- We receive more than 5,000 individual content data points in total, all generally variations and permutations of each other, like the Home & Garden example alluded to earlier.

- Our Normalization Engine categorizes popular and frequently-received values, while outliers are manually combed to continually update and inform our engines over time.

- The end result allows us to provision high-fidelity targeting across more than 70 rich contextual targets, including sports, home and garden, food and cooking, and much, much more.
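The engine described in the list above (categorize frequent values automatically, route outliers to manual review) can be sketched as a tiered lookup. This is a hypothetical illustration, not Beachfront’s Normalization Engine: the three canonical targets, the alias table, and the 0.85 similarity cutoff are all assumptions, and fuzzy matching here uses Python’s standard `difflib.get_close_matches`.

```python
import difflib
import re

# Hypothetical canonical targets; a production taxonomy would have many more.
CANONICAL_TARGETS = ["Home & Garden", "Sports", "Food & Cooking"]

# Hypothetical alias table for known synonyms, keyed by cleaned text.
ALIASES = {
    "house & gardening": "Home & Garden",
    "cooking": "Food & Cooking",
}


def clean(raw: str) -> str:
    """Lowercase and unify separators so trivial variants compare equal."""
    text = raw.strip().lower()
    text = re.sub(r"\s*(?:&|/|\+|\band\b)\s*", " & ", text)
    return re.sub(r"\s+", " ", text).strip()


# Map each cleaned canonical key back to its display name.
CANONICAL_BY_KEY = {clean(t): t for t in CANONICAL_TARGETS}


def normalize_genre(raw: str, review_queue: list, cutoff: float = 0.85):
    """Return a canonical target for `raw`, or None after queuing it for review."""
    key = clean(raw)
    # 1. Exact match after cleanup.
    if key in CANONICAL_BY_KEY:
        return CANONICAL_BY_KEY[key]
    # 2. Known synonym from the alias table.
    if key in ALIASES:
        return ALIASES[key]
    # 3. Close spelling match, which catches typos such as "graden".
    close = difflib.get_close_matches(key, list(CANONICAL_BY_KEY), n=1, cutoff=cutoff)
    if close:
        return CANONICAL_BY_KEY[close[0]]
    # 4. Outlier: hand off for manual review so the tables can be updated.
    review_queue.append(raw)
    return None


queue = []
for label in ["Home / Garden", "Home + Graden", "House & Gardening", "Knitting"]:
    print(label, "->", normalize_genre(label, queue))
print("needs review:", queue)
```

The cutoff is the main trade-off: set it too low and unrelated genres get merged, too high and ordinary typos pile up in the review queue. Whatever reviewers resolve would then be added to the alias table, which is one way the manual combing step can feed back into the automated one.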

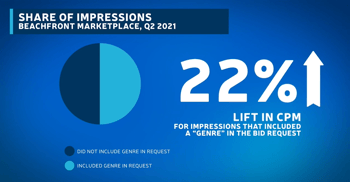

Data normalization benefits both media buyers and sellers. The former receives scalable contextual targeting options that deliver more relevant ads, while the latter is set to earn premiums through greater inventory transparency and pooling (which drives scale). In fact, in Q2 of 2021, our marketplace received a Content Genre on roughly half of the CTV impressions we delivered. Those impressions that included a Normalized Genre in the bid request saw a 22% lift in CPM pricing over those that did not.
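For readers who want the arithmetic behind a figure like the 22% lift, a relative CPM lift is conventionally computed as shown below. The post does not state exactly how its figure was derived (for example, whether the CPMs were impression-weighted averages), so this formula is an assumption, and the \$10.00 baseline is a hypothetical number used only to show the arithmetic.

$$
\text{CPM lift} = \frac{\overline{\mathrm{CPM}}_{\text{with genre}} - \overline{\mathrm{CPM}}_{\text{without genre}}}{\overline{\mathrm{CPM}}_{\text{without genre}}},
\qquad
\$10.00 \times (1 + 0.22) = \$12.20
$$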

Sharing valuable data in the bidstream is a simple way for media sellers to drive immediate yield and revenue lifts, and normalization engines play a vital role in ensuring that this data is accurate, clean, and transactable.

Lest We Forget About Data Protection.

We can’t talk about data without also discussing privacy and protection. First-party data is an immensely valuable proprietary asset for programmers and media owners, and it should be treated as such. Historically, however, the toolsets needed to simultaneously monetize and protect first-party data in a privacy-conscious manner haven’t existed. Data leakage remains a key issue today, especially in elongated supply chains where unnecessary intermediaries are sometimes privy to sensitive, proprietary first-party data in the bidstream.

As media owners look to monetize first-party data more consistently to drive buying activity and yield, it’s key that their ad operations teams are equipped with solutions to better control what gets shared in the bidstream (and with whom). Configurable settings for both audience and content metadata, which Beachfront offers via our self-service Deals Engine module, empower these teams to control, obfuscate, and even remove data shared in the bidstream.
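As a rough illustration of what per-buyer controls over audience and content metadata can look like, here is a sketch that removes some fields and obfuscates others before a request is passed along. It is not how the Deals Engine works: the `POLICY` format, the dotted field paths, the `apply_policy` helper, and the salt are all hypothetical. Salted SHA-256 hashing is pseudonymization, not true anonymization, so it reduces but does not eliminate re-identification risk.

```python
import copy
import hashlib

# Hypothetical per-buyer policy: dotted field paths to remove or to hash.
POLICY = {
    "remove": ["user.data", "app.content.title"],  # audience segments, show title
    "obfuscate": ["user.id"],                       # replace with a salted hash
}


def _parent_of(obj: dict, path: str):
    """Walk to the dict holding the last path segment; None if absent."""
    *parents, leaf = path.split(".")
    for key in parents:
        obj = obj.get(key)
        if not isinstance(obj, dict):
            return None, leaf
    return obj, leaf


def apply_policy(bid_request: dict, policy: dict, salt: str) -> dict:
    """Return a redacted copy of the request; the original is left untouched."""
    redacted = copy.deepcopy(bid_request)
    for path in policy.get("remove", []):
        parent, leaf = _parent_of(redacted, path)
        if parent is not None:
            parent.pop(leaf, None)
    for path in policy.get("obfuscate", []):
        parent, leaf = _parent_of(redacted, path)
        if parent is not None and leaf in parent:
            digest = hashlib.sha256((salt + str(parent[leaf])).encode("utf-8"))
            parent[leaf] = digest.hexdigest()
    return redacted


# Hypothetical request carrying both content and audience metadata.
request = {
    "id": "req-1",
    "app": {"content": {"genre": "Home & Garden", "title": "Example Show"}},
    "user": {"id": "user-123", "data": [{"id": "seg-provider", "segment": [{"id": "s1"}]}]},
}

shared = apply_policy(request, POLICY, salt="hypothetical-per-buyer-salt")
print(shared)
```

Using a different salt per buyer in this sketch means two buyers receive different hashes for the same user, which makes it harder for them to join their data on that identifier.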

The Time to Revisit First-Party Data Strategies is Now.

Our collective industry is undoubtedly at a major inflection point following the events of the last year plus. Viewing habits, business models, and monetization priorities are changing. Not to mention, privacy legislation and internet browsers continue to evolve as well.

Media owners who have a robust, privacy-conscious data strategy in place, one that safeguards valuable first-party data while allowing it to be monetized via some of the applications I shared above, stand to benefit.

The time is right to re-evaluate and revisit your data strategies, and Beachfront is here to help. To learn more about our Deals Engine, and to get started, visit here.